The Singularity is Near!

When commentators and newscasters talk about the 'existential risks' of AI, what exactly do they mean? It turns out it's far crazier and scarier than you might think.

Existential risks

Lately, it seems that dire warnings about the “existential risks” of AI are everywhere. AI executives and researchers are hastily abandoning their well-paid, fascinating jobs, driven to madness and guilt by the notion that they may have set humanity on an inevitable path to extinction. As Oppenheimer recalled, quoting Hindu scripture, upon witnessing the detonation of the first nuclear bomb:

Now I am become Death, the destroyer of worlds.

When the BBC 10 o'clock news recently opened with a dire warning from the usually unexcitable newscaster Huw Edwards that AI was a threat to humanity on a par with nuclear weapons, I spat out my tea. It wasn't because I thought this was all overblown scaremongering. Rather, it was because it reminded me of a book I had read fifteen or so years earlier, one that predicted everything that is now happening, and much more besides.

So, before you read on, grab a stiff drink and strap yourself in, because I'm afraid the subject is actually far more terrifying than the dire warnings might have led you to believe.

The singularity is near

The book I read back in the mid-2000s was called "The Singularity is Near", by the American computer scientist, inventor and futurist Ray Kurzweil. It's lengthy, dense and filled with peculiar and fascinating ideas, but its core thesis is clear and emerges in the first couple of chapters.

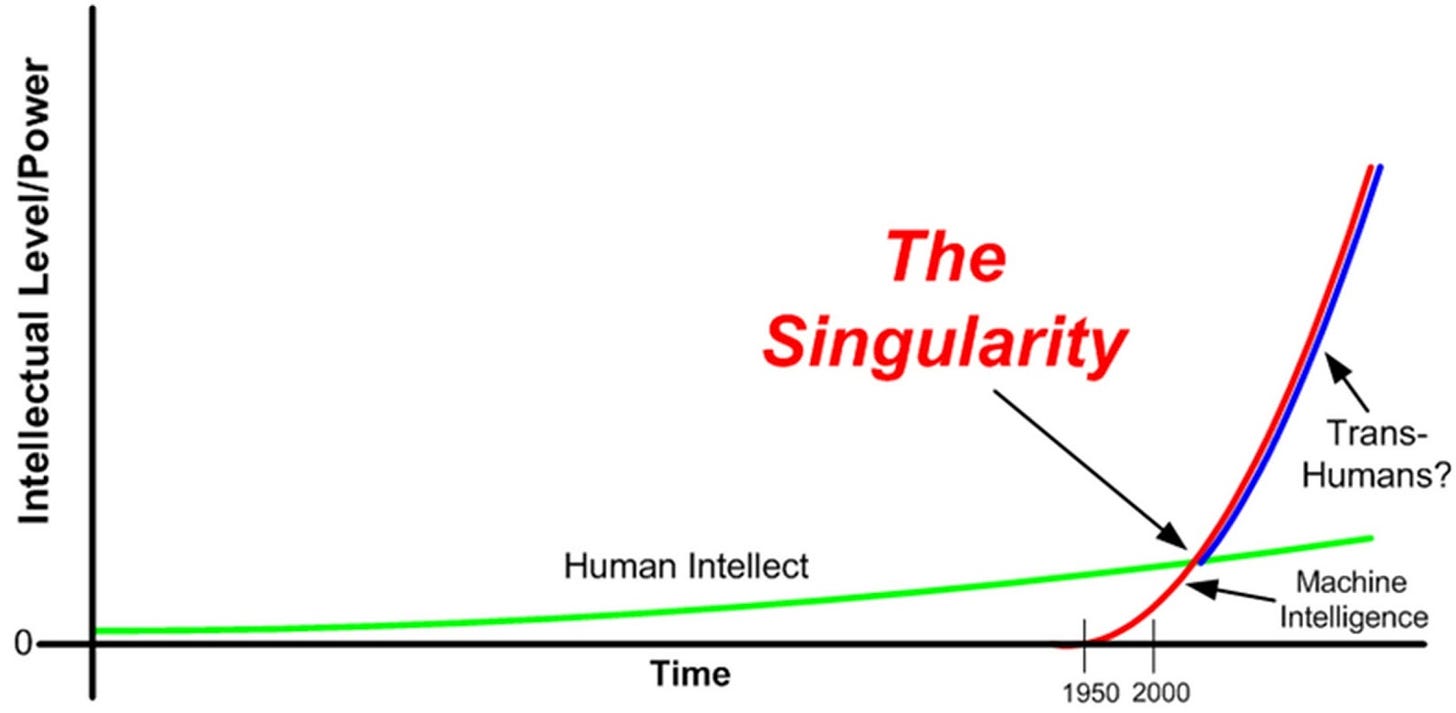

Kurzweil describes an accelerating trend of order and intelligence that extends back to the birth of the universe. Starting from fundamental physics and chemistry and the formation of celestial bodies, he postulates that the universe has raced along an inevitable path of increasing structure and intelligence. More recently, the human gifts of language and tool building have enabled vast technological progress, complex machinery and sophisticated supercomputers. He argues, though, that this is merely the beginning: further developments will keep compounding in an ever-accelerating feedback loop of advancement.

This idea quickly becomes terrifying. He postulates an inflection point, the ‘singularity’ of the book’s title. He describes it as a point of explosive, exponential progress, reached at the moment when computers surpass human intelligence in every way we think of as human and a new type of being is created: the trans-human.

Humanity superseded

According to Kurzweil, advances in AI, neuroscience, nanotechnology and biological engineering will inevitably lead to human intelligence being merged with, and supplemented by, enhanced computer-based intelligence. These trans-humans will supersede humanity, and the exponential growth in intelligence will continue. Kurzweil hypothesises that humanity's unique destiny therefore lies in its being the first entity in history to evolve itself by inventing the thing that comes after it.

So his ideas go well beyond the notion of computers outperforming humans at work tasks. They also go beyond current, more tangible concerns such as the vulnerability of transport networks, power grids, financial networks and military operations to AI threats. The medium-term development he presents as inevitable is nothing less than the obsolescence of humanity itself.

Taking over the asylum

After some existential angst and catastrophising of my own, I was initially able to dismiss this book for two reasons. The first was that the timescales predicted in Kurzweil’s book seemed way off. The second was that he seemed to me to be more than a little delusional. The reason he wasn’t terrified by his own theories was that he was desperate to be one of the first trans-humans himself. At the time I was reading his book, Kurzweil was taking two hundred and fifty different supplements and drinking ten glasses of alkaline water, ten cups of green tea and multiple glasses of red wine each day in an effort to “reprogram” his biochemistry. He was seeking to stay alive long enough to benefit from the technological life extension he saw as imminent, while insisting that in the future everyone would live forever. So his driver was simply the age-old folly of seeking the fountain of eternal youth.

The concepts in Kurzweil’s book hovered around my head like a vague bad dream for the next few years. Then, in 2012, the hairs on the back of my neck stood up when I read that Google had recruited Ray as its Director of Engineering to lead its ‘Manhattan Project’ of AI. His vision of supplanting humanity was unchanged, and he was now in a position to steer the phenomenal resources of one of the largest companies in history towards realising it. In particular, he was boldly stating that computers would be cleverer than humans by 2029. And he explained a key first step that he was working on:

…we would like to actually have the computers read. We want them to read everything on the web and every page of every book, then be able to engage an intelligent dialogue with the user to be able to answer their questions.

It was an implausible claim at the time, but Kurzweil relentlessly directed Google's resources towards it. Of course, many of us have now personally engaged with this kind of breakthrough through ChatGPT, the OpenAI chatbot backed by Google's competitor Microsoft.

So the last six months have shown the world that Kurzweil was right about this, as he has been about many other things. By his own 2010 assessment, about 86 percent of his predictions had proved correct. What are the chances that he’s right about all of it? Even a slim chance of a trans-human dictator or terrorist wreaking havoc in the world seems too much to me.

As Schopenhauer said:

Everyone takes the limits of his own vision for the limits of the world.

Ray Kurzweil (and a few others like him) may or may not be delusional. But they still seem to have a rare gift for seeing further into the future than the rest of us. And as most of us reach the blurry edges of our ability to understand the possibilities here, that is a powerful gift indeed.

The (near) future

So where does all of this leave us? My mammalian brain doesn’t have any long-term answers. How could it, when I’m increasingly obsolete and scheduled to go the way of the dinosaurs? Certainly there is a race against time to regulate and control these technologies, in case we really are on a path to becoming the prisoners of our own inventions.

So, before I’m completely adrift in this sea of catastrophe, there is at least one practical principle that is clear to me, a possible anchor to steady us: we must take a precautionary approach to the obvious safety risks of AI. That means all engineered systems that can fail in a fatal way need to be segregated from AI and networked communications as a matter of principle. They must be unreachable by the complex telecommunications and computer systems that we are racing to install for short-term convenience. We already accept these constraints for nuclear weapons or, say, the emergency brakes of passenger trains. Pretty much any function of an engineered asset that could fail in a fatal way needs to be treated in the same way. That’s the most practical way to mitigate a number of the risks that lie ahead, existential or otherwise.

I’m keen to build the network for Tech Safe Transport. If you know anyone who is interested in the safety of modern transport technology and who likes a thought-provoking read every few weeks, please do share a link with them.

Thanks for reading

All views here are my own. Please feel free to share any thoughts or comments, or drop me an email at george.bearfield@ntlworld.com.

My particular area of professional interest is practical risk management and assurance of new technology. I’m always keen to engage on interesting projects or research in this area.